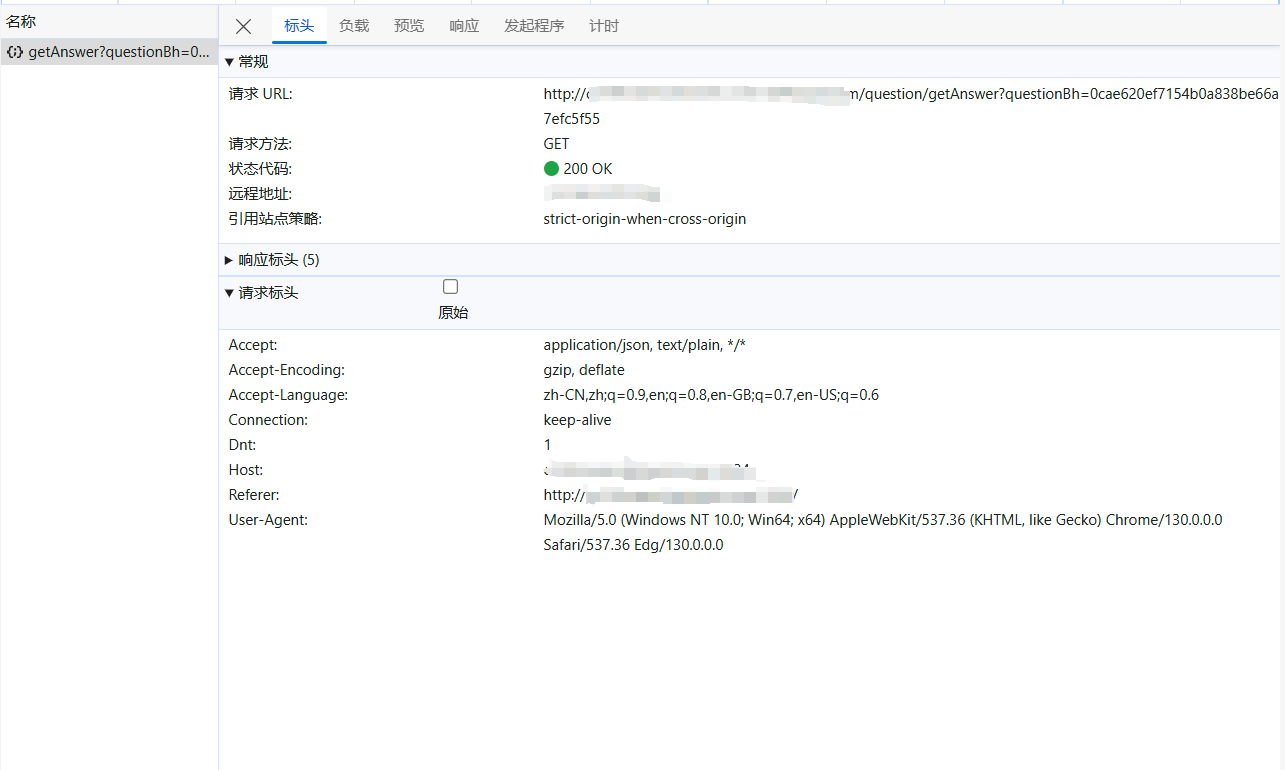

get

import requests

query= input(“输入你想搜索的东西\n”)

url = f’https://www.sogou.com/web?query={query}‘

headers = {

“user-agent”:”Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/130.0.0.0 Safari/537.36 Edg/130.0.0.0”

}

resp = requests.get(url,headers=headers)

print(resp)

print(resp.text)

resp.close()

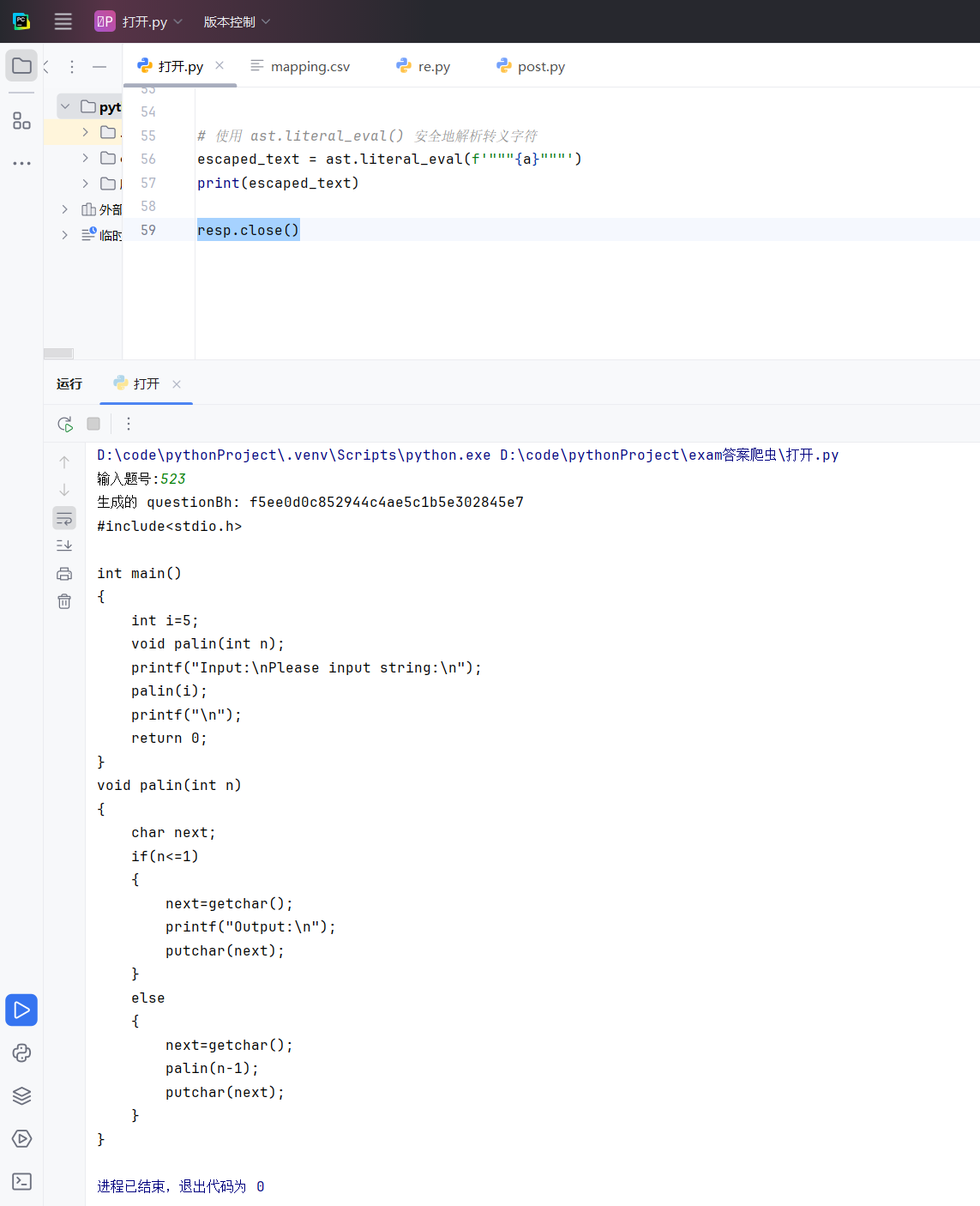

post

import requests

url = “https://fanyi.baidu.com/sug“

word=input(“输入想翻译的单词”)

dat={

“kw”:word

}

resp = requests.post(url,data=dat)

print(resp.json())

resp.close()

re

import re

import csv

findall 很小的文件可以用

lst=re.findall(r”\d+”,”my10086”)

print(lst)

finditer 迭代器?最常用

it=re.finditer(r”\d+”,”my10086”)

for i in it:

print(i.group())

search只找到一个结果就返回

#search返回是match对象,拿数据要.group()

lst=re.search(r”\d+”,”my10086”)

print(lst.group())

match必须从头匹配

s = re.match(r”\d+”,”my10086”)

print(s.group())

预加载正则表达式

obj = re.compile(r”\d+”)

it = obj.finditer(“1234”)

for i in it:

print(i.group())

it = obj.finditer(“5678”)

for i in it:

print(i.group())

查找限定组

obj = re.compile(“xxx.?xxx(?P.?)”,re.S)

result = obj.finditer(s)

#取所有组

for i in result:

print(i.group())

#取某个组 #(?P正则)

for i in result:

print(i.group(“name”))